AI is now deeply embedded in Britain’s national cyber defences. It sits quietly behind government servers, defence networks, banking systems, border security databases and national grid controls — scanning billions of data packets for signs of attack every second.

If those AI systems failed or were compromised, the UK would face a severe digital crisis that could cripple national infrastructure within hours. The scenario may sound theoretical, but it is not far‑fetched: the more we rely on automation to defend ourselves, the more damaging it is when that automation fails.

The Role of AI in the UK’s Cyber Defences

AI as the Digital Watchdog

AI systems are used by the National Cyber Security Centre (NCSC) and the Ministry of Defence (MoD) to detect unusual patterns of behaviour across networks — for example:

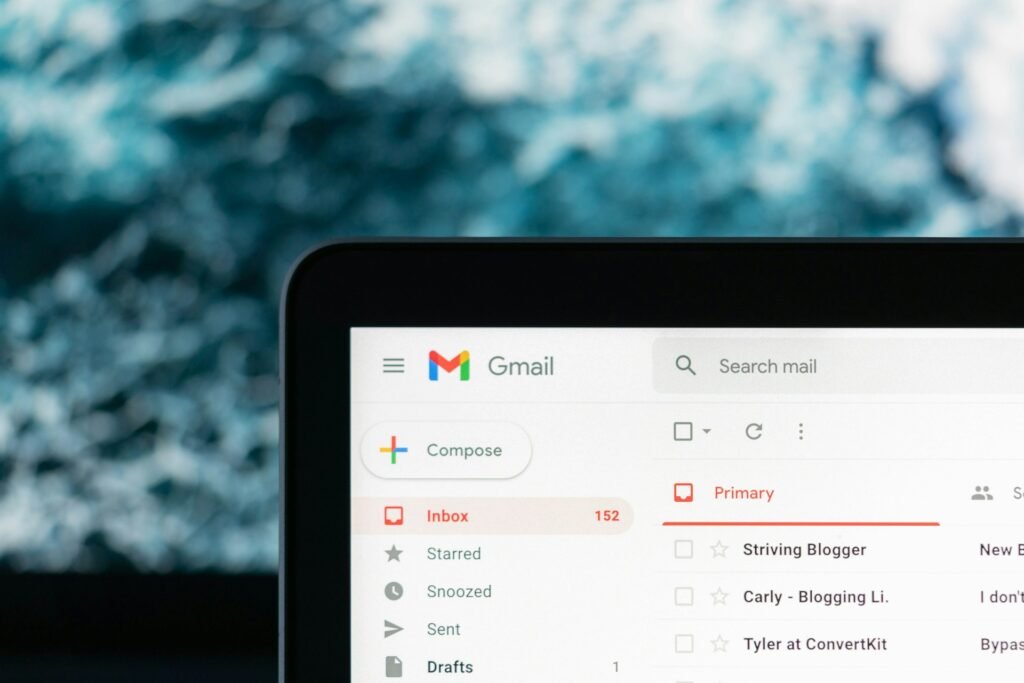

- Phishing campaigns or fake government emails.

- Malware propagation within Whitehall departments.

- Intrusions on energy grid systems, detected in real time.

These AI models learn from past attacks and can adapt to new threats far faster than human monitoring alone.
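As a minimal sketch of the kind of pattern detection described above, the Python below flags traffic readings that deviate sharply from a learned baseline. The z-score method, the names `fit_baseline` and `is_anomalous`, the threshold of 3.0 and the sample figures are all illustrative assumptions, not a description of any NCSC or MoD system.

```python
from statistics import mean, stdev

def fit_baseline(history):
    """Learn the mean and standard deviation of past, known-normal readings."""
    return mean(history), stdev(history)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a reading more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical requests-per-second samples from a quiet network segment.
normal_traffic = [100, 104, 98, 101, 97, 103, 99, 102]
baseline = fit_baseline(normal_traffic)

print(is_anomalous(101, baseline))  # a reading within the usual range
print(is_anomalous(450, baseline))  # a sudden spike
```

Real detection systems are likely to use far richer features and models, but the principle is the same: learn what normal looks like, then flag departures from it.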

Critical Systems Protected by AI

- Finance: AI monitors suspicious monetary transfers across the Bank of England, clearing houses and payment systems.

- Transport: GPS and air‑traffic systems rely on AI to identify signal interference or spoofing.

- Defence: The Strategic Command’s Digital Warfare Unit uses AI to monitor cyber threats to satellites and communication lines.

- Public Services: NHS and local authority databases are secured using machine learning to detect breaches.

What Could Cause AI Cyber Security to Fail?

Internal Technical Failure

A software malfunction — such as a corrupted AI model, outdated algorithm, or power outage in data servers — could disable automatic threat detection.

Because AI defences are integrated into everything from email filters to nuclear command encryption, even a brief system failure could leave networks temporarily blind to cyber‑intrusions.

External Manipulation

If adversaries managed to feed false information into these AI systems, the network could be tricked into:

- Ignoring genuine attacks (false negatives).

- Shutting down legitimate data flow (false positives).

This so‑called “data poisoning” is one of the most feared possibilities in modern cyber warfare, as it could mask a full‑scale hostile intrusion.
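To make the idea concrete, here is a small sketch of how poisoned training data can produce a false negative. It assumes a deliberately simple detector that sets its alert threshold a few standard deviations above the training mean; the function `learn_threshold` and all figures are hypothetical.

```python
from statistics import mean, stdev

def learn_threshold(training_samples, k=3.0):
    """Set the alert threshold k standard deviations above the training mean."""
    return mean(training_samples) + k * stdev(training_samples)

# Hypothetical outbound-transfer sizes (MB) recorded as "normal" activity.
clean = [10, 12, 11, 9, 10, 13, 11, 12]
# The attacker slips large transfers into the training set, labelled as normal.
poisoned = clean + [400, 420, 410, 430]

attack_size = 300  # the real exfiltration attempt, in MB

clean_threshold = learn_threshold(clean)
poisoned_threshold = learn_threshold(poisoned)

print(attack_size > clean_threshold)     # True: the clean model raises an alert
print(attack_size > poisoned_threshold)  # False: the poisoned model stays silent
```

A handful of planted samples is enough to stretch the model's idea of "normal" so far that the genuine attack no longer stands out.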

AI Model Compromise or Hijacking

Because AI defence software relies on cloud‑based learning, a breach of the UK government’s AI training servers could allow attackers to modify the very detection rules that keep the country safe.

Essentially, Britain’s digital immune system could be turned against itself.
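One common safeguard against this kind of tampering is to check a model file against a fingerprint recorded when it was approved. The sketch below assumes that approach; the byte strings stand in for a serialised model, and the names `fingerprint` and `is_untampered` are hypothetical.

```python
import hashlib
import hmac

def fingerprint(model_bytes: bytes) -> str:
    """Return the SHA-256 digest of a serialised model as a hex string."""
    return hashlib.sha256(model_bytes).hexdigest()

def is_untampered(model_bytes: bytes, trusted_digest: str) -> bool:
    """Compare against a digest recorded when the model was approved."""
    return hmac.compare_digest(fingerprint(model_bytes), trusted_digest)

# Hypothetical stand-ins for a serialised model file.
approved_model = b"detection-rules-v1"
trusted_digest = fingerprint(approved_model)  # assumed to be stored offline

tampered_model = b"detection-rules-v1-allow-attacker"

print(is_untampered(approved_model, trusted_digest))  # True
print(is_untampered(tampered_model, trusted_digest))  # False
```

The check only helps if the trusted digest is kept somewhere the attacker cannot reach, which is why the comment assumes offline storage.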

What Would Be the Immediate Consequences?

1. Infrastructure Chaos

Should AI security go offline, hackers could exploit the window of opportunity to:

- Disrupt banking operations (blocking online payments or cash withdrawals).

- Interfere with air and rail signalling systems, causing nationwide delays.

- Attack utility companies, leading to short‑term power blackouts or water‑supply interference.

According to simulations by the Centre for the Protection of National Infrastructure (CPNI, 2025), a systemic AI cyber failure could cost the UK economy up to £2.8 billion within the first 48 hours.

2. Data Breaches and National Secrets

Confidential government, military, and personal records would be highly vulnerable.

Hostile states or organised cyber‑criminal groups could exploit the chaos to extract sensitive information, including:

- Intelligence service operations.

- NHS medical databases.

- Industrial and defence blueprints.

3. Loss of Public Trust

If a high‑profile cyber‑attack happened during an AI failure, public confidence in the UK’s digital security and government competence would plummet.

This could trigger mass panic, particularly if digital services like HMRC tax systems or NHS databases went offline.

How Long Would It Take to Recover?

Stage 1 – Emergency Containment (Hours to Days)

The first step would be to isolate affected systems.

The NCSC, supported by GCHQ and MoD Cyber Command, would initiate manual override protocols, cutting internet links to major servers and reverting to human‑supervised systems.

During this stage, digital activity across government departments and financial markets might slow or even freeze.

It could take 24–72 hours for engineers to stabilise core networks and confirm which systems were safe to reconnect.

Stage 2 – System Restoration (Weeks)

AI defences would need to be wiped and rebuilt from secure backups.

New models would be retrained on verified data, a process that could take two to six weeks, depending on the extent of corruption or breach.

While this occurs, semi‑manual monitoring systems would protect the most sensitive national infrastructure.
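Retraining on verified data implies some way of telling reviewed records from unreviewed ones. The sketch below assumes each reviewed record carries an HMAC tag issued by an auditor, and keeps only records whose tag checks out. The key, the record contents and the function names are hypothetical.

```python
import hashlib
import hmac

AUDIT_KEY = b"hypothetical-auditor-key"  # illustration only; never hard-code keys

def sign(record: bytes) -> str:
    """Tag a record that an auditor has reviewed."""
    return hmac.new(AUDIT_KEY, record, hashlib.sha256).hexdigest()

def verified_only(dataset):
    """Keep only records whose tag matches a fresh HMAC of their content."""
    return [rec for rec, tag in dataset if hmac.compare_digest(sign(rec), tag)]

dataset = [
    (b"benign login from office network", sign(b"benign login from office network")),
    (b"port scan from unknown host", sign(b"port scan from unknown host")),
    (b"attacker-inserted 'benign' sample", "0" * 64),  # forged or missing tag
]

print(len(verified_only(dataset)))  # 2: the forged record is dropped
```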

Stage 3 – Forensic Audit and Policy Recovery (Months)

Teams from the Cabinet Office, Home Office, and Intelligence and Security Committee would perform an exhaustive forensic audit to trace how the failure happened, determine culpability, and realign national cyber strategy.

Realistically, full recovery to pre‑incident confidence could take up to a year, as new AI systems are tested, certified, and phased back into use.

How Would the UK Correct the Fault?

1. Deploy Backup AI Systems

Critical infrastructure operators are required under the National Cyber Strategy (2022–2030) to maintain redundant AI instances — essentially “shadow systems” that can take over instantaneously if primary defences fail.

These backups run on separate data servers, ensuring security continuity.
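The failover idea can be sketched as a priority list of detectors, where a request falls through to the next instance when one is unavailable. The `Detector` class and `inspect_with_failover` function are hypothetical illustrations, not part of any named strategy or product.

```python
class Detector:
    """A stand-in for one AI detection instance (hypothetical)."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy

    def inspect(self, event):
        if not self.healthy:
            raise RuntimeError(f"{self.name} is unavailable")
        return f"{self.name} inspected {event}"

def inspect_with_failover(event, detectors):
    """Try each detector in priority order and return the first result."""
    for detector in detectors:
        try:
            return detector.inspect(event)
        except RuntimeError:
            continue  # fall through to the next, separately hosted instance
    raise RuntimeError("all detectors failed; escalate to human analysts")

primary = Detector("primary", healthy=False)
shadow = Detector("shadow")

print(inspect_with_failover("login burst", [primary, shadow]))
# prints: shadow inspected login burst
```

If every instance fails, the function raises rather than silently passing traffic, which mirrors the hand-off to human teams described in the next step.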

2. Human Cyber Teams Step In

Elite analyst units — such as the UK Cyber Reserve Force — would take manual control of network monitoring, reverting to traditional anomaly detection and intrusion prevention.

3. Patch, Purge, and Re‑train

Once the digital environment is secured, compromised AI models would be purged and replaced. Future training datasets would be verified by third‑party human auditors, possibly within allied intelligence frameworks like Five Eyes (UK, US, Canada, Australia, New Zealand).

4. Strengthen AI Verification Layers

Future AI systems would be fitted with explainability modules — transparent reporting layers showing why the algorithm blocked or allowed specific activity.

This guards against “black‑box” dependency and catches erratic decisions before they cascade into system‑wide faults.
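A minimal sketch of such a reporting layer follows, assuming a simple rule-based detector that records which rules fired alongside its verdict. The rules, the `Decision` type and the `evaluate` function are hypothetical; the IP address comes from a range reserved for documentation.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    blocked: bool
    reasons: list = field(default_factory=list)

# Hypothetical rules: each pairs a human-readable reason with a check.
RULES = [
    ("source address is on the deny list", lambda e: e["src"] in {"203.0.113.7"}),
    ("payload exceeds 10 MB", lambda e: e["size_mb"] > 10),
    ("request made outside working hours", lambda e: not 8 <= e["hour"] < 18),
]

def evaluate(event, min_hits=2):
    """Block when at least `min_hits` rules fire, and record which ones did."""
    reasons = [text for text, check in RULES if check(event)]
    return Decision(blocked=len(reasons) >= min_hits, reasons=reasons)

decision = evaluate({"src": "203.0.113.7", "size_mb": 42, "hour": 14})
print(decision.blocked)  # True
print(decision.reasons)  # the two rules that fired
```

Because every verdict carries its reasons, an analyst reviewing a sudden wave of blocks can see at a glance whether one misbehaving rule is responsible.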

Real‑World Implications and Risks

Economic Shock

A national cyber‑defence blackout wouldn’t just be a digital scare; it would have real‑world financial effects. Stock markets could suspend trading within minutes of suspicious network behaviour, global confidence in the City of London might dip, and cyber‑insurance claims could surge.

International Coordination

Britain’s allies would likely intervene quickly.

Under joint agreements with NATO and the EU Cyber Rapid Response Teams, digital assistance could arrive within hours to help rebuild the network or host temporary secure servers abroad.

Psychological Impact

Public anxiety would mirror a national‑scale power outage.

From disrupted online banking to delayed medical results and disabled travel systems, people would suddenly recognise how much daily life depends on invisible algorithms — and how vulnerable that dependence really is.

How the UK Is Reducing the Risk

- Redundant AI Security Layers: Multiple, isolated AI defence systems are designed to operate independently; if one fails, others assume control.

- Hybrid Oversight: No AI is allowed to control critical infrastructure without human supervisory approval, especially in defence, nuclear energy or air traffic.

- “Digital Resilience Exercises”: The NCSC conducts war‑game simulations to measure how long systems can operate under manual conditions.

- Investment in Explainable AI (XAI): Funding aims to ensure future systems can justify their decisions and expose corrupt data inputs in real time.
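The hybrid oversight item in the list above can be sketched as an approval gate: actions proposed by the AI run automatically in ordinary sectors but are held for a named human in critical ones. The sector names come from the text; the `apply_action` function and its messages are hypothetical.

```python
CRITICAL_SECTORS = {"defence", "nuclear energy", "air traffic"}

def apply_action(action, sector, approved_by=None):
    """Carry out an AI-proposed action, holding critical ones for sign-off."""
    if sector in CRITICAL_SECTORS and approved_by is None:
        return f"HELD: '{action}' in {sector} awaits human approval"
    suffix = f" (approved by {approved_by})" if approved_by else ""
    return f"EXECUTED: '{action}' in {sector}{suffix}"

print(apply_action("quarantine mail server", "local government"))
print(apply_action("isolate radar feed", "air traffic"))
print(apply_action("isolate radar feed", "air traffic", approved_by="duty officer"))
```

The second call is held and the third is executed, so the only difference between them is the human sign-off.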

A Real‑World View

While official reports claim the UK could withstand a temporary AI cyber failure, the truth is that the nation’s resilience depends on speed and luck. A simultaneous multi‑sector attack — banking, health, and power — could overwhelm even backup protocols.

Recovery would happen, but only after major economic cost and public disruption, and the investigation would expose the tension at the heart of modern security: we trust machines to keep us safe, even though we can barely keep up with how they think.

References (UK and Official Sources)

- National Cyber Security Centre – Cyber Defence Overview (2025)

- UK Government – National Cyber Strategy 2022–2030

- Centre for the Protection of National Infrastructure – Resilience and AI Simulation Report (2025)

- GCHQ/NCSC – Cyber Threat to Critical Infrastructure Report (2024)

- House of Commons Defence Committee – Digital Warfare and Resilience Inquiry (2025)

Summary

| Impact Area | Immediate Effect | Recovery Timeline | Corrective Action |
|---|---|---|---|
| Financial Systems | Payment disruption, trading halts | 2–5 days | Network isolation & re‑authentication |
| Defence Networks | Loss of active threat detection | Weeks | Manual monitoring & AI retraining |
| Public Infrastructure | Delays, outages, limited services | Several weeks | Rebuild from secure backups |
| Government Data Integrity | Vulnerable to leaks & tampering | Months | Forensic audit & secure re‑encryption |
| Public Confidence | Serious loss of trust in institutions | Up to a year | Transparent communication & reforms |

In conclusion:

If the UK’s AI cyber‑security systems failed, national security would face immediate disruption. Operations could take weeks to recover, and public confidence could take months, possibly up to a year.

The correction process would depend on manual human control, redundant AI defences, and forensic system rebuilding.

AI makes Britain safer — but also more vulnerable to the single point of failure created by our faith in automated intelligence.