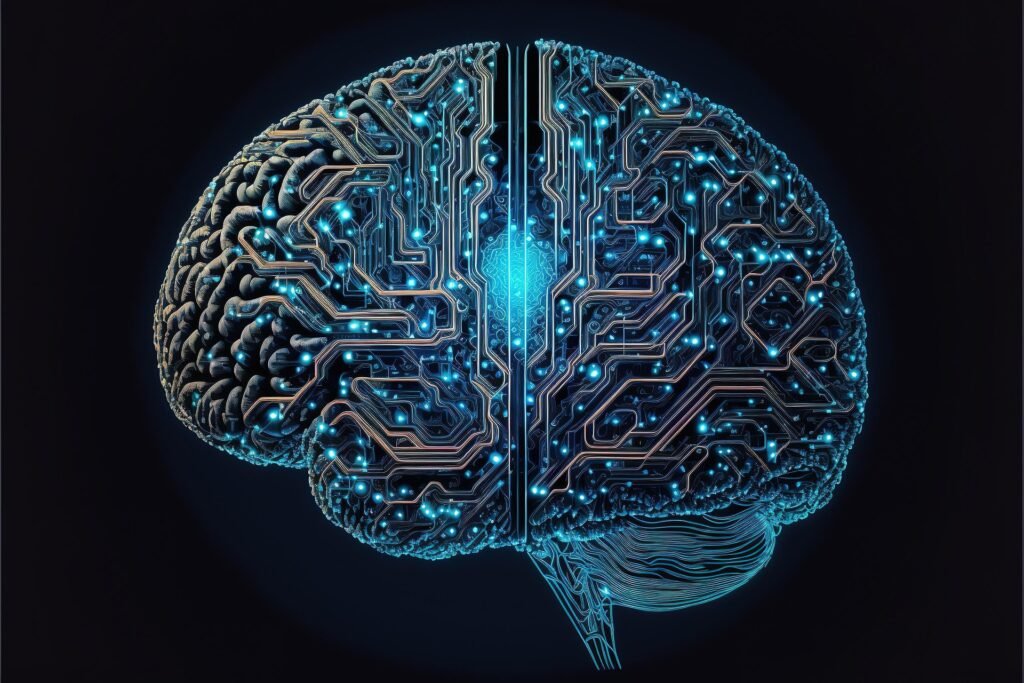

One third of adults in the UK have used artificial intelligence for emotional support or social interaction, according to the first report published by the AI Security Institute (AISI).

The report draws on two years of testing the abilities of more than 30 unnamed AI models, and includes a survey of 2,000 UK adults. The survey found that the AI tools people primarily turned to for emotional support and social interaction were chatbots such as OpenAI’s ChatGPT and voice assistants such as Amazon’s Alexa.

The study also found that some people who relied on chatbots for emotional support reported “symptoms of withdrawal”, such as anxiety and depression, when the technology stopped working.

These symptoms were described by members of a Reddit community dedicated to AI companions during a chatbot outage.


Security Flaws

As well as the emotional impact of AI use, AISI researchers looked at other risks posed by the technology’s rapidly advancing capabilities.

The research shows there is genuine concern that AI could enable cyber attacks, but equally that it can be used to help protect systems from hackers.

It found that AI’s ability to spot and exploit security flaws is doubling roughly every eight months.

AI systems are also beginning to complete expert-level cyber tasks that would typically require more than 10 years of human experience.


AI Capabilities

AISI examined whether models could carry out simple versions of the tasks needed in the early stages of self-replication, such as “passing know-your-customer checks required to access financial services” in order to purchase the computing power on which their copies would run.

However, the research found that to do this in the real world, AI systems would need to complete several such actions in sequence “while remaining undetected”, something the findings suggest they currently lack the capacity to do.

Institute experts also looked at the possibility of models “sandbagging”, or strategically hiding their true capabilities from testers. Their tests showed that this was possible, but they found no evidence of it actually happening.


Summary

People are relying on AI more and more without weighing the risks. They see it as their ‘friend’ and entrust it with their feelings and emotions. They use tools that have become familiar fixtures in their homes and workplaces without considering that these could be harmful or unsafe.

Could one AI launch a cyber attack undetected while another AI defends against it? That certainly seems feasible in the future.

Opinion

This doesn’t bode well for people. The study shows that people are willing to trust AI regardless of the consequences, simply for convenience and ease. The idea of confiding your deepest feelings and emotions to a computer that talks back would have seemed preposterous perhaps only five years ago, but now it’s fine!

Above all else, the research shows that there is simply no way of knowing what’s coming.